Integrate AI Into Your Backend with the Hugging Face API

Backend developers can integrate AI models through the Hugging Face Inference API, which gives access to a wide range of models. The focus here is on using the API rather than on algorithm details. With 2024 predicted to be the year of AI-integrated apps, developers are urged to master these tools.

If you're a backend developer, you can already integrate AI models into your code without any in-depth knowledge of how they work.

With the Hugging Face Inference API, you get free access to a large catalog of AI models for your various use cases.

If you missed out on the AI revolution in 2023, you're still early. 2024 is the year of AI-integrated apps, and as a backend developer you should understand how to use these APIs as and when needed.

What is Hugging Face?

It is a platform where anyone can upload their AI models and have APIs generated on top of them automatically. Think of it like GitHub for AI models.

Big organizations like Facebook, Microsoft, and Google have uploaded several AI models there that you can access right now.

This is already amazing. There's no need to understand the algorithms: just learn how to use the Hugging Face API and you're all set. As long as the customer is happy, we're happy.

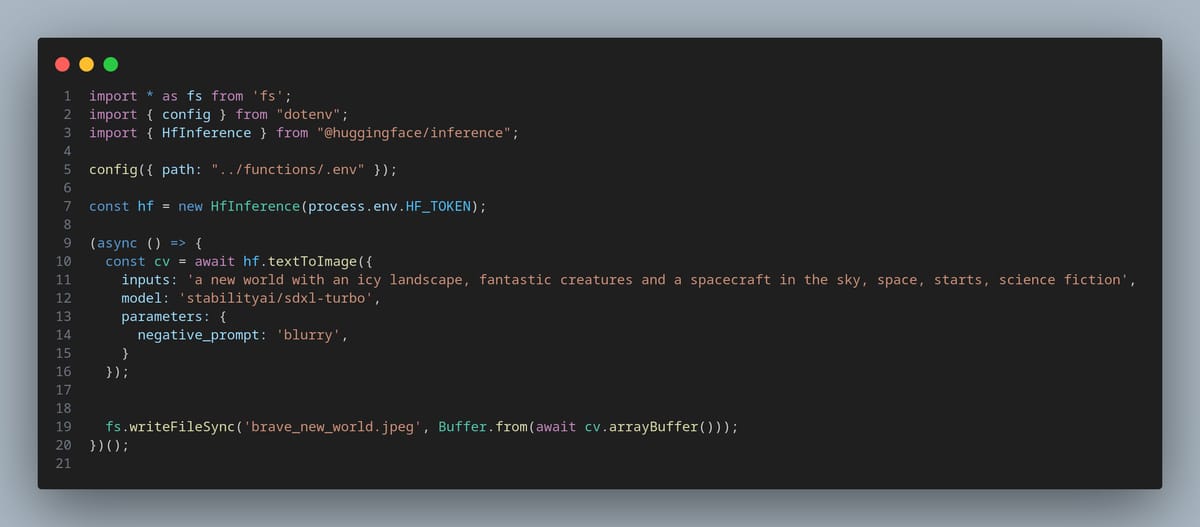

You're already a developer, so I'm not going to walk through code in detail here. Don't hesitate to reach out to me if you need specific help or have questions. To get started, visit their documentation: https://huggingface.co/docs/huggingface.js/index
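To give a feel for how little code is involved, here is a minimal TypeScript sketch of a raw HTTP call to a hosted model. Treat it as an illustration under assumptions rather than the official client: the base URL is the endpoint shape the hosted Inference API has used and may have changed, `queryModel` and the injectable `fetchImpl` parameter are names made up for this example, and the model id and `HF_TOKEN` variable in the closing comment are only examples. The documentation linked above covers the current endpoints and the official `@huggingface/inference` client.

```typescript
// Sketch only: the endpoint shape is an assumption, verify it against the current docs.
const HF_API_BASE = "https://api-inference.huggingface.co/models";

// Minimal description of the parts of fetch this example relies on,
// so a fake implementation can be injected in tests.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Hypothetical helper: POST the input text to a hosted model and return the parsed JSON.
async function queryModel(
  model: string,
  inputs: string,
  token: string,
  fetchImpl: FetchLike = (globalThis as any).fetch, // built-in fetch on Node 18+
): Promise<unknown> {
  const response = await fetchImpl(`${HF_API_BASE}/${model}`, {
    method: "POST",
    headers: {
      Authorization: `Bearer ${token}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ inputs }),
  });
  if (!response.ok) {
    throw new Error(`Inference request failed with status ${response.status}`);
  }
  return response.json();
}

// Example call (model id and token source are placeholders):
// const output = await queryModel("some-org/some-model", "I love this!", process.env.HF_TOKEN ?? "");
```

The shape of the returned JSON depends on the model's task, which is why the sketch leaves it as `unknown`.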

Integration Best Practices

A few comments about integrating AI models into your backend. The most important point: don't accept the request and return the model's response in the same endpoint call. Most of these AI models take a while to finish running, so a synchronous call can leave the client waiting or time out.

You might want to have two endpoints: one to queue a job for the AI model and another to check the status of the job and fetch its result. Of course, there are many ways to do this. If you want to be a bit fancy, you could go with WebSockets, but in my opinion that is overkill for most applications. Simple polling of the status endpoint (or long polling) is usually enough.
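The two-endpoint idea can be sketched as follows. This is a minimal in-memory illustration, not production code: `InferenceJobs`, `submit`, `get`, and the `POST /jobs` and `GET /jobs/:id` routes named in the comments are all hypothetical, the model call is passed in as a function, and a real service would likely use a persistent queue rather than a `Map`.

```typescript
type JobStatus = "queued" | "running" | "done" | "failed";

interface Job {
  id: string;
  status: JobStatus;
  result?: unknown;
  error?: string;
}

// The model call is injected, e.g. a function that calls a hosted model.
type ModelRunner = (input: string) => Promise<unknown>;

class InferenceJobs {
  private jobs = new Map<string, Job>();
  private nextId = 1;
  private runModel: ModelRunner;

  constructor(runModel: ModelRunner) {
    this.runModel = runModel;
  }

  // Body of a hypothetical POST /jobs handler: register the job and return
  // right away, without waiting for the model to finish.
  submit(input: string): Job {
    const job: Job = { id: String(this.nextId++), status: "queued" };
    this.jobs.set(job.id, job);
    const snapshot = { ...job };
    void this.process(job, input); // deliberately not awaited
    return snapshot;
  }

  // Body of a hypothetical GET /jobs/:id handler: report status and any result.
  get(id: string): Job | undefined {
    const job = this.jobs.get(id);
    return job ? { ...job } : undefined;
  }

  // Runs the model in the background and records the outcome on the job.
  private async process(job: Job, input: string): Promise<void> {
    job.status = "running";
    try {
      job.result = await this.runModel(input);
      job.status = "done";
    } catch (err) {
      job.error = err instanceof Error ? err.message : String(err);
      job.status = "failed";
    }
  }
}
```

The client calls the first endpoint once, keeps the returned id, and then polls the second endpoint until the status is `done` or `failed`.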

Some models are very fast, though, so you could take a blended approach: queue a job for slow models and respond immediately for fast ones, depending on the model being triggered.
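A blended setup could be as simple as a lookup that decides which path a request takes. The sketch below assumes you maintain your own list of models known to respond quickly; `FAST_MODELS`, the model id in it, and `handleInference` are hypothetical names, and both the model call and the queue are passed in as functions.

```typescript
// Runs the model and resolves with its output.
type RunModel = (model: string, input: string) => Promise<unknown>;
// Puts the work on a queue and returns a job id the client can poll.
type EnqueueJob = (model: string, input: string) => string;

type InferenceResponse =
  | { mode: "immediate"; result: unknown }
  | { mode: "queued"; jobId: string };

// Hypothetical allow-list of models assumed fast enough to answer inline.
const FAST_MODELS = new Set<string>(["example-org/small-classifier"]);

async function handleInference(
  model: string,
  input: string,
  run: RunModel,
  enqueue: EnqueueJob,
): Promise<InferenceResponse> {
  if (FAST_MODELS.has(model)) {
    // Fast model: wait for the result and return it in the same response.
    return { mode: "immediate", result: await run(model, input) };
  }
  // Slow model: hand back a job id and let the client check on it later.
  return { mode: "queued", jobId: enqueue(model, input) };
}
```

Returning a tagged `mode` lets the client tell from a single response whether it already has the result or needs to start polling.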